Best Practices for Deploying Kafka in Containers

This article was last updated on 2024-07-24, so some of its content may be out of date.

I. Deploying a single-node Kafka with Docker

1.1 Install Docker

See the guide "Installing Docker on CentOS 7 and configuring a registry mirror".

1.2 Install Kafka with ZooKeeper

1.2.1 Run ZooKeeper

docker run -d --name zookeeper -p 2181:2181 swr.cn-east-3.myhuaweicloud.com/srebro/middleware/zookeeper:3.4.13

1.2.2 Run Kafka (replace the ZooKeeper and Kafka addresses with your own)

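docker run -d --name kafka -p 9092:9092 -e KAFKA_BROKER_ID=0 -e KAFKA_ZOOKEEPER_CONNECT=172.16.10.157:2181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://localhost:9092 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 swr.cn-east-3.myhuaweicloud.com/srebro/middleware/kafka:2.13-2.8.1

KAFKA_LISTENERS is the address the broker binds to inside the container. KAFKA_ADVERTISED_LISTENERS is the address the broker returns to clients in its metadata. With PLAINTEXT://localhost:9092, only clients on the Docker host itself can connect, including the console tools run inside the container below. For clients on other machines, advertise the host's IP instead, for example PLAINTEXT://172.16.10.157:9092.

1.2.3 Log in to the Kafka container and create a topic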

docker exec -it kafka bash

cd /opt/kafka_2.13-2.8.1/bin

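./kafka-topics.sh --zookeeper 172.16.10.157:2181 --create --topic mytestTopic1 --replication-factor 1 --partitions 3

Use the same ZooKeeper address as in 1.2.2. The --zookeeper option is deprecated in Kafka 2.x and was removed in 3.0. On 2.8.1, --bootstrap-server localhost:9092 can be used in its place.

1.2.4 Log in to the Kafka container and start a producer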

docker exec -it kafka bash

cd /opt/kafka_2.13-2.8.1/bin

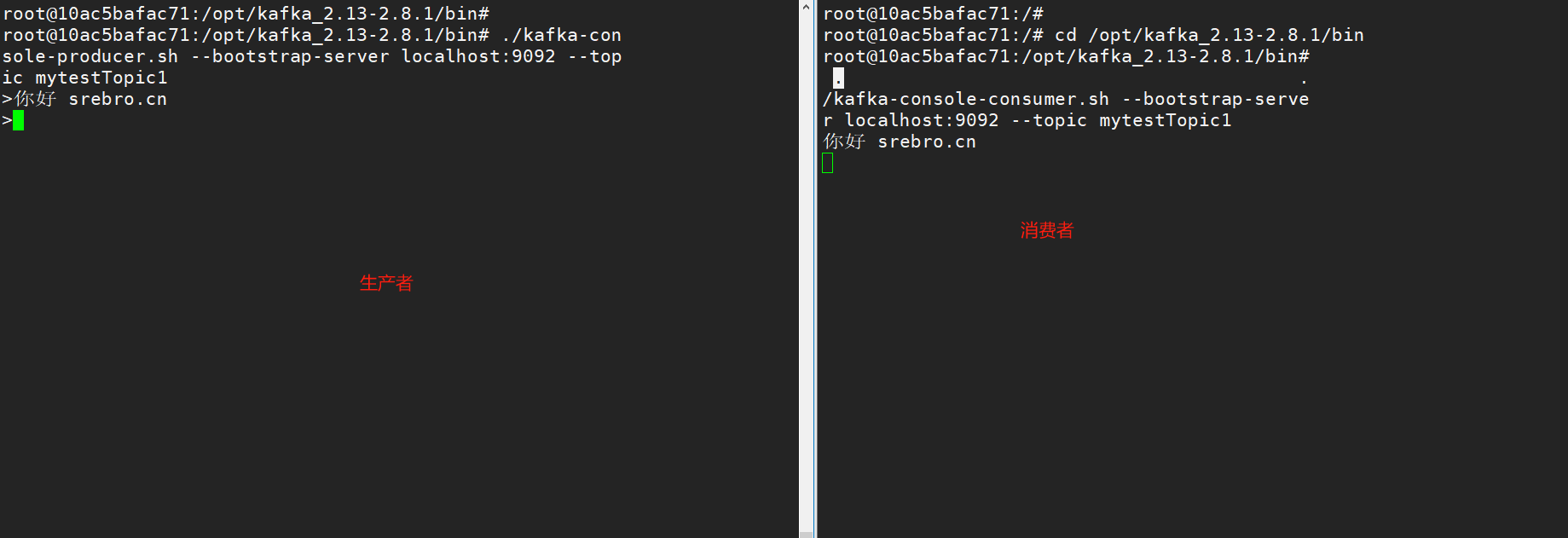

./kafka-console-producer.sh --bootstrap-server localhost:9092 --topic mytestTopic1

1.2.5 Open a new terminal window, log in to the Kafka container, and start a consumer

docker exec -it kafka bash

cd /opt/kafka_2.13-2.8.1/bin

./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic mytestTopic1

1.2.6 Type a few test messages into the producer and check that the consumer receives them

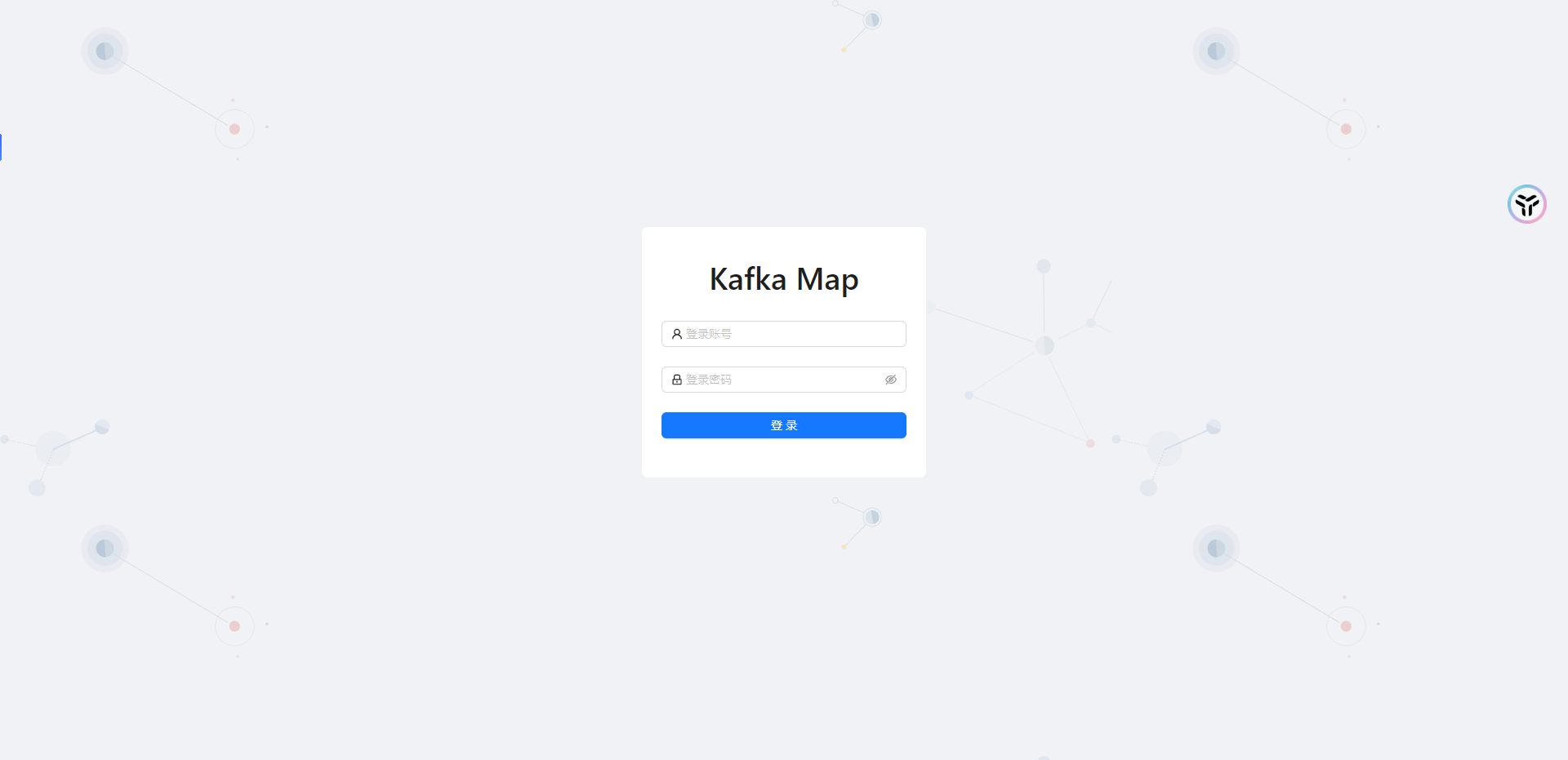

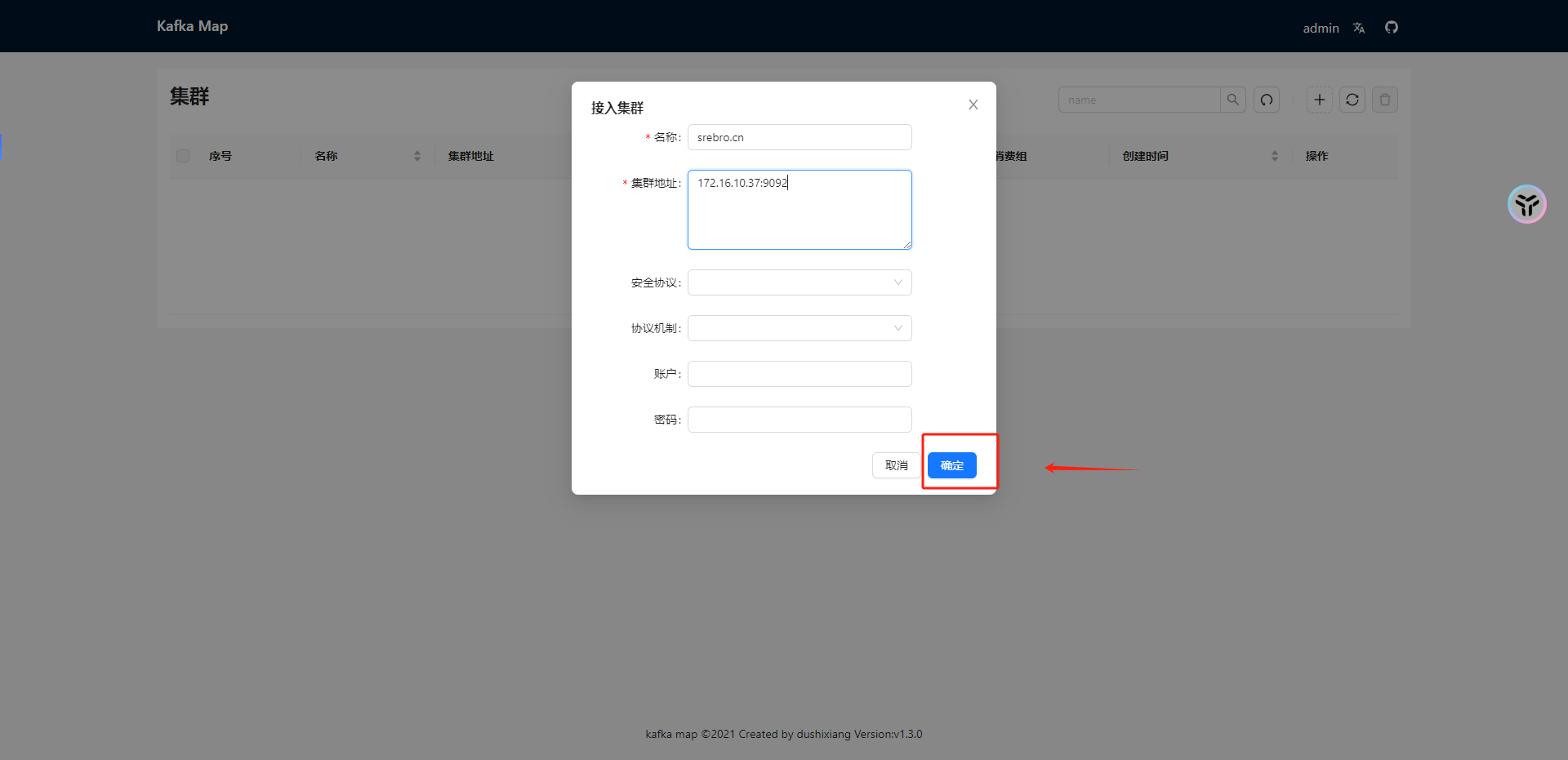

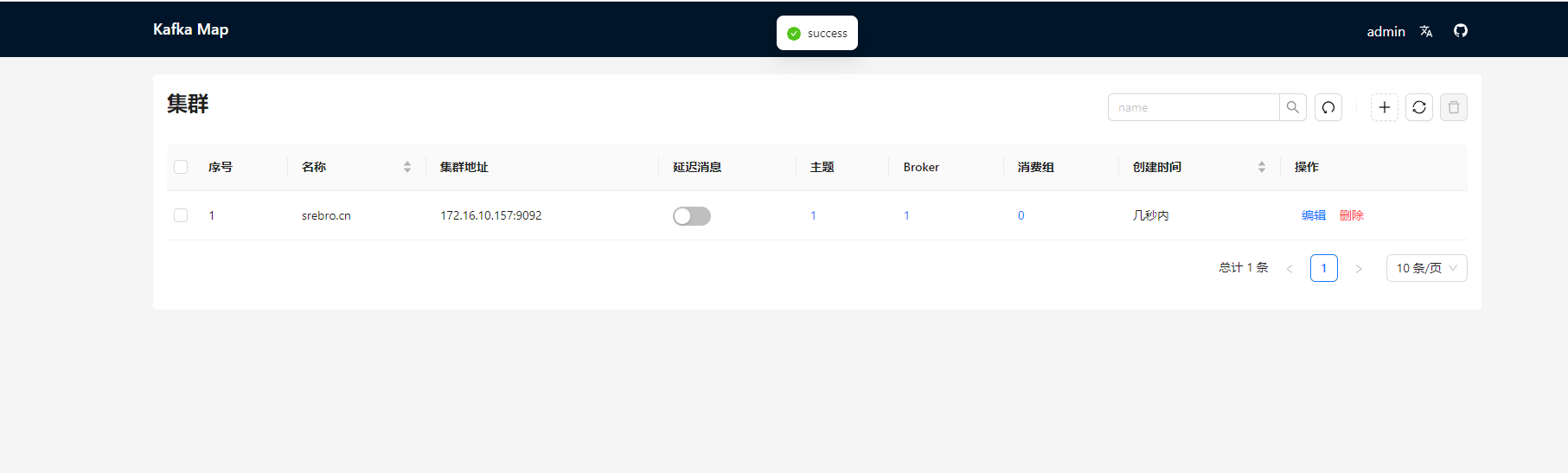
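Steps 1.2.4 to 1.2.6 need two interactive terminals. The same round trip can also be scripted, which is handy after every redeploy. Below is a minimal sketch meant to run inside the kafka container (docker exec -it kafka bash). The function name `kafka_smoke_test` and the variables `KAFKA_BIN`, `BOOTSTRAP` and `TOPIC` are my own choices. Their defaults mirror this section; for the 3.6.0 image, `KAFKA_BIN` would be /opt/kafka/bin. `--from-beginning` and `--timeout-ms` are standard console-consumer options. The topic must already exist, or auto-creation must be enabled.

```bash
#!/usr/bin/env bash
# Scripted version of steps 1.2.4-1.2.6 (a sketch; run inside the kafka container).
# Defaults mirror this article; override them through environment variables.
KAFKA_BIN="${KAFKA_BIN:-/opt/kafka_2.13-2.8.1/bin}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"
TOPIC="${TOPIC:-mytestTopic1}"

kafka_smoke_test() {
  # Unique payload, so records left over from earlier runs cannot cause a false pass.
  local marker="smoke-$(date +%s)-$$"

  # The console producer sends each stdin line as one record and exits at EOF.
  printf '%s\n' "$marker" |
    "$KAFKA_BIN/kafka-console-producer.sh" --bootstrap-server "$BOOTSTRAP" --topic "$TOPIC" ||
    return 1

  # Read the topic from the start; the consumer exits after 10 s without new records.
  if "$KAFKA_BIN/kafka-console-consumer.sh" --bootstrap-server "$BOOTSTRAP" --topic "$TOPIC" \
       --from-beginning --timeout-ms 10000 2>/dev/null | grep -qxF "$marker"; then
    echo "OK: received $marker"
  else
    echo "FAIL: $marker was not consumed" >&2
    return 1
  fi
}
```

After defining the function (for example by sourcing the file), run `kafka_smoke_test`. It returns non-zero if the marker never comes back, so it can gate a deployment script.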

1.2.7 kafka-map web management tool

kafka-map describes itself as "a beautiful, concise and powerful kafka web management tool".

docker run -d \
  -p 8080:8080 \
  -v /opt/kafka-map/data:/usr/local/kafka-map/data \
  -e DEFAULT_USERNAME=admin \
  -e DEFAULT_PASSWORD=admin \
  --name kafka-map \
  --restart always dushixiang/kafka-map:latest

1.3 Install Kafka 3.6.0 (KRaft mode, no ZooKeeper required)

1.3.1 Write the Dockerfile

You can build the image yourself from the three files below, or use my prebuilt image swr.cn-east-3.myhuaweicloud.com/srebro/middleware/kafka-kraft:3.6.0.

install_kafka.sh

#!/usr/bin/env bash

# Stop at the first failing command, so a failed download also fails the image build
set -e

# Build the archive path from the Kafka and Scala versions
path=/kafka/${KAFKA_VERSION}/kafka_${SCALA_VERSION}-${KAFKA_VERSION}.tgz

# Ask Apache's closer.cgi script for the URL of the nearest mirror
downloadUrl=$(curl --stderr /dev/null "https://www.apache.org/dyn/closer.cgi?path=${path}&as_json=1" | jq -r '"\(.preferred)\(.path_info)"')

# If that URL is not reachable (older releases are removed from the mirrors), fall back to the Apache archive
if [[ ! $(curl -sfI "${downloadUrl}") ]]; then
  downloadUrl="https://archive.apache.org/dist/${path}"
fi

# Download Kafka to /tmp/kafka.tgz
wget "${downloadUrl}" -O "/tmp/kafka.tgz"

# Unpack Kafka into /opt
tar xfz /tmp/kafka.tgz -C /opt

# Remove the downloaded archive
rm /tmp/kafka.tgz

# Create a symlink so the installation is always reachable at /opt/kafka
ln -s /opt/kafka_${SCALA_VERSION}-${KAFKA_VERSION} /opt/kafka

start_kafka.sh

#!/usr/bin/env bash

if [[ -z "$KAFKA_LISTENERS" ]]; then
  echo 'Using default listeners'
else
  echo "Using listeners: ${KAFKA_LISTENERS}"
  sed -r -i "s@^#?listeners=.*@listeners=$KAFKA_LISTENERS@g" "/opt/kafka/config/kraft/server.properties"
fi

if [[ -z "$KAFKA_ADVERTISED_LISTENERS" ]]; then
  echo 'Using default advertised listeners'
else
  echo "Using advertised listeners: ${KAFKA_ADVERTISED_LISTENERS}"
  sed -r -i "s@^#?advertised.listeners=.*@advertised.listeners=$KAFKA_ADVERTISED_LISTENERS@g" "/opt/kafka/config/kraft/server.properties"
fi

if [[ -z "$KAFKA_LISTENER_SECURITY_PROTOCOL_MAP" ]]; then
  echo 'Using default listener security protocol map'
else
  echo "Using listener security protocol map: ${KAFKA_LISTENER_SECURITY_PROTOCOL_MAP}"
  sed -r -i "s@^#?listener.security.protocol.map=.*@listener.security.protocol.map=$KAFKA_LISTENER_SECURITY_PROTOCOL_MAP@g" "/opt/kafka/config/kraft/server.properties"
fi

if [[ -z "$KAFKA_INTER_BROKER_LISTENER_NAME" ]]; then
  echo 'Using default inter broker listener name'
else
  echo "Using inter broker listener name: ${KAFKA_INTER_BROKER_LISTENER_NAME}"
  sed -r -i "s@^#?inter.broker.listener.name=.*@inter.broker.listener.name=$KAFKA_INTER_BROKER_LISTENER_NAME@g" "/opt/kafka/config/kraft/server.properties"
fi

uuid=$(/opt/kafka/bin/kafka-storage.sh random-uuid)
# Format the storage directory. --ignore-formatted skips directories that were
# already formatted on an earlier start instead of failing on them.
/opt/kafka/bin/kafka-storage.sh format -t "$uuid" -c /opt/kafka/config/kraft/server.properties --ignore-formatted

# exec replaces the shell, so Kafka runs as PID 1 and receives the SIGTERM sent by docker stop
exec /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/kraft/server.properties

Dockerfile

FROM alpine:3.16 AS builder

RUN echo "http://mirrors.nju.edu.cn/alpine/v3.16/main" > /etc/apk/repositories \
    && echo "http://mirrors.nju.edu.cn/alpine/v3.16/community" >> /etc/apk/repositories
ENV KAFKA_VERSION=3.6.0
ENV SCALA_VERSION=2.13
COPY install_kafka.sh /bin/
RUN apk update \
    && apk add --no-cache bash curl jq \
    && /bin/install_kafka.sh \
    && apk del curl jq

FROM alpine:3.16

RUN echo "http://mirrors.nju.edu.cn/alpine/v3.16/main" > /etc/apk/repositories \
    && echo "http://mirrors.nju.edu.cn/alpine/v3.16/community" >> /etc/apk/repositories
RUN apk update && apk add --no-cache bash openjdk11-jre
COPY --from=builder /opt/kafka /opt/kafka
COPY start_kafka.sh /bin/
CMD [ "/bin/start_kafka.sh" ]

1.3.2 Build the image

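Both scripts are executed directly, and COPY keeps the file mode from the build context, so make them executable before building:

chmod +x install_kafka.sh start_kafka.sh
docker build -t XXXX/kafka-kraft .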
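The four if/else blocks in start_kafka.sh all apply the same pattern: when an environment variable is set, rewrite the matching key in server.properties with sed. If you customize the script before building, you could keep that pattern in one place with a helper such as the hypothetical `set_prop` below, which is not part of the original image. Unlike the inline sed calls, it does three extra things:

- It escapes the dots in keys.
- It escapes the `\`, `&` and `@` characters that sed would otherwise treat specially in values.
- It appends the key when it is missing instead of silently doing nothing.

It assumes GNU or BusyBox sed, as the original script does. The same helper could point log.dirs at the /var/lib/kafka/data volume used in 1.3.3.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original image): set key=value in a
# Java .properties file such as /opt/kafka/config/kraft/server.properties.
# An existing line, commented out or not, is rewritten; a missing key is appended.
set_prop() {
  local key="$1" value="$2" file="$3"
  local key_re value_esc
  # Escape the dots in keys like "advertised.listeners" so they match literally.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  # Escape \, & and @, which are special in the replacement part of s@...@...@.
  value_esc=$(printf '%s' "$value" | sed 's/[\&@]/\\&/g')
  if grep -Eq "^#?${key_re}=" "$file"; then
    sed -r -i "s@^#?${key_re}=.*@${key}=${value_esc}@" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Possible use in start_kafka.sh (KAFKA_LOG_DIRS is a hypothetical variable):
# if [[ -n "$KAFKA_LOG_DIRS" ]]; then
#   set_prop log.dirs "$KAFKA_LOG_DIRS" /opt/kafka/config/kraft/server.properties
# fi
```

Each of the four original blocks would then shrink to a single `set_prop` call guarded by its `-z` check.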

1.3.3 Run Kafka

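docker run -d --name kafka -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://172.16.10.37:9092 -p 9092:9092 -v /home/application/Middleware/kafka/data:/var/lib/kafka/data --restart=always swr.cn-east-3.myhuaweicloud.com/srebro/middleware/kafka-kraft:3.6.0

As far as the scripts above show, the image keeps the stock setting log.dirs=/tmp/kraft-combined-logs from Kafka's config/kraft/server.properties. In that case the /var/lib/kafka/data volume stays empty unless log.dirs is changed to point at it.

1.3.4 Run the kafka-map management tool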

docker run -d \
  -p 19006:8080 \
  -v /opt/kafka-map/data:/usr/local/kafka-map/data \
  -e DEFAULT_USERNAME=admin \
  -e DEFAULT_PASSWORD=admin \
  --name kafka-map \
  --restart always dushixiang/kafka-map:latest

II. Deploying a single-node Kafka with docker-compose

2.1 Install docker-compose

See the guide "Installing docker-compose on CentOS 7".

2.2 Write the docker-compose file

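Note: create a dedicated Docker network first. Both compose files below declare it as external: true, so Compose expects the network to exist already and will not create it.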

Create the custom network:

docker network create -d bridge --subnet "192.168.10.0/24" --gateway "192.168.10.1" srebro.cn
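`docker network create` fails if the network already exists. That gets in the way when this step lives in a setup script that may run more than once. Below is a minimal idempotent wrapper that checks with `docker network inspect` first. It assumes the Docker CLI is on PATH, and `ensure_network` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Create a user-defined bridge network only if it does not exist yet.
# Usage: ensure_network NAME SUBNET GATEWAY
ensure_network() {
  local name="$1" subnet="$2" gateway="$3"
  # `docker network inspect` exits non-zero when the network is unknown.
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists, skipping"
  else
    docker network create -d bridge --subnet "$subnet" --gateway "$gateway" "$name"
  fi
}
```

For this article the call would be `ensure_network srebro.cn 192.168.10.0/24 192.168.10.1`. The wrapper does not check that an existing network has the expected subnet. If that matters, compare the output of `docker network inspect` yourself.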

Kafka + ZooKeeper version:

version: '3'

services:
  zookeeper:
    image: swr.cn-east-3.myhuaweicloud.com/srebro/middleware/zookeeper:3.4.13
    networks:
      - srebro.cn
    volumes:
      - /home/application/Middleware/zookeeper-kafka/zookeeper/data:/data
    container_name: zookeeper
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
    ports:
      - 2181:2181
    restart: 'unless-stopped'

  kafka:
    image: swr.cn-east-3.myhuaweicloud.com/srebro/middleware/kafka:2.13-2.8.1
    container_name: kafka
    networks:
      - srebro.cn
    depends_on:
      - zookeeper
    ports:
      - 9092:9092
    volumes:
      - /home/application/Middleware/zookeeper-kafka/kafka/data:/kafka
    environment:
      KAFKA_CREATE_TOPICS: "test:1:1"   # name:partitions:replicas
      KAFKA_BROKER_ID: 0
      KAFKA_LISTENERS: PLAINTEXT://kafka:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.16.10.157:9092
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_HEAP_OPTS: "-Xmx512M -Xms16M"
    restart: 'unless-stopped'

  kafka-map:
    image: dushixiang/kafka-map:latest
    networks:
      - srebro.cn
    volumes:
      - "./kafka-map/data:/usr/local/kafka-map/data"
    ports:
      - 9006:8080
    environment:
      DEFAULT_USERNAME: admin
      DEFAULT_PASSWORD: 123456
    depends_on:
      - zookeeper
      - kafka
    restart: 'unless-stopped'

networks:
  srebro.cn:
    external: true

Kafka 3.6.0 version (KRaft mode, no ZooKeeper required):

version: '3'

services:
  kafka:
    image: swr.cn-east-3.myhuaweicloud.com/srebro/middleware/kafka-kraft:3.6.0
    networks:
      - srebro.cn
    environment:
      # The stock KRaft config runs broker and controller in one process, so if you
      # uncomment the listener settings below, keep the CONTROLLER entries.
      #KAFKA_LISTENERS: PLAINTEXT://:9092,CONTROLLER://:9093  # addresses Kafka listens on
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.16.10.201:9092  # address published to clients
      #KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT  # listener-to-security-protocol mapping
      #KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT  # listener used for broker-to-broker traffic
    ports:
      - "9092:9092"
    volumes:
      - /home/application/Middleware/kafka/data:/var/lib/kafka/data
    restart: always

  kafka-map:
    image: dushixiang/kafka-map:latest
    networks:
      - srebro.cn
    volumes:
      - "./kafka-map/data:/usr/local/kafka-map/data"
    ports:
      - 19006:8080
    environment:
      DEFAULT_USERNAME: admin
      DEFAULT_PASSWORD: 123456
    depends_on:
      - kafka
    restart: 'unless-stopped'

networks:
  srebro.cn:
    external: true

III. Troubleshooting

3.1 The ZooKeeper container fails to start with the following error:

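zookeeper | library initialization failed - unable to allocate file descriptor table - out of memory
/usr/bin/start-zk.sh: line 4: 12 Aborted (core dumped) /opt/zookeeper-3.4.13/bin/zkServer.sh start-foreground

The JVM could not allocate its file descriptor table. The JVM sizes this table from the process's open-file limit (nofile). The error therefore typically appears when the Docker daemon, and every container it starts, runs with an extremely high or unlimited nofile value. The fix is to set explicit limits at both the operating-system level and the Docker level.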
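Before changing anything, you can confirm the cause by checking the open-file limit that a fresh container actually inherits. Below is a small sketch. `nofile_too_high` is a hypothetical helper that flags values that are `unlimited` or above a threshold. Its default of 1048576 is my own assumption, not a documented JVM limit.

```bash
#!/usr/bin/env bash
# Return 0 (true) if an open-file limit, as printed by `ulimit -n`,
# is "unlimited" or larger than MAX (default 1048576, an assumed threshold).
nofile_too_high() {
  local limit="$1" max="${2:-1048576}"
  [ "$limit" = "unlimited" ] && return 0
  [ "$limit" -gt "$max" ]
}

# Example (needs Docker): check what a new container inherits from dockerd.
# limit=$(docker run --rm alpine:3.16 sh -c 'ulimit -n')
# nofile_too_high "$limit" && echo "nofile=$limit is very high; cap it with the steps in this section"
```

If only one container is affected, Docker also accepts a narrower, per-container limit instead of a daemon-wide change. Use `docker run --ulimit nofile=65535:65535 ...`, or a `ulimits:` entry with `nofile` soft/hard values in the compose service.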

(1) Operating-system level:

cat >> /etc/security/limits.conf << eof

root soft nofile 65535

root hard nofile 65535

root soft nproc 65535

root hard nproc 65535

root soft core unlimited

root hard core unlimited

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

* soft core unlimited

* hard core unlimited

eof

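Then delete the *-nproc.conf files under /etc/security/limits.d/. Files in that directory are read after limits.conf, so otherwise their settings take precedence. Note that limits.conf is applied by PAM to login sessions, not to services started by systemd such as dockerd, which is why the Docker-level change is also needed.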

cd /etc/security/limits.d/

rm -rf *-nproc.conf

(2) Docker level:

vim /etc/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=65535

LimitNPROC=65535

LimitCORE=65535

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

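WantedBy=multi-user.target

Reload systemd and restart Docker so the new limits take effect:

systemctl daemon-reload
systemctl restart docker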
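Copying the whole unit into /etc/systemd/system/docker.service works, but a package upgrade will not update that copy. A common alternative is a systemd drop-in that overrides only the limits. Below is a sketch that writes one with the same values as above. The function name and the `SYSTEMD_DIR` variable are assumptions; the variable exists only so the function can be tried against a scratch directory. Afterwards, run `systemctl daemon-reload` and `systemctl restart docker`, then check the result with `systemctl show docker -p LimitNOFILE`. `systemctl edit docker` creates the same kind of override interactively.

```bash
#!/usr/bin/env bash
# Write a drop-in that overrides only the resource limits of docker.service.
# SYSTEMD_DIR defaults to the real location; point it elsewhere to try it out.
write_docker_limits_dropin() {
  local dir="${SYSTEMD_DIR:-/etc/systemd/system}/docker.service.d"
  mkdir -p "$dir" || return 1
  # Same limits as the full unit file shown above.
  cat > "$dir/limits.conf" <<'EOF'
[Service]
LimitNOFILE=65535
LimitNPROC=65535
LimitCORE=65535
EOF
}
```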


This is an original article licensed under CC BY-NC-ND 4.0. If you repost it in full, please credit 运维小弟.